If you like ASL and would like to use more real-life handshapes in VR, this system is for you.

If you want specific inputs to trigger specific poses, this system is also for you.

[TODO: Add Video of the System in action]

Resonite added a way to pose fingers, so I immediately jumped on the opportunity to make an ASL gesture system.

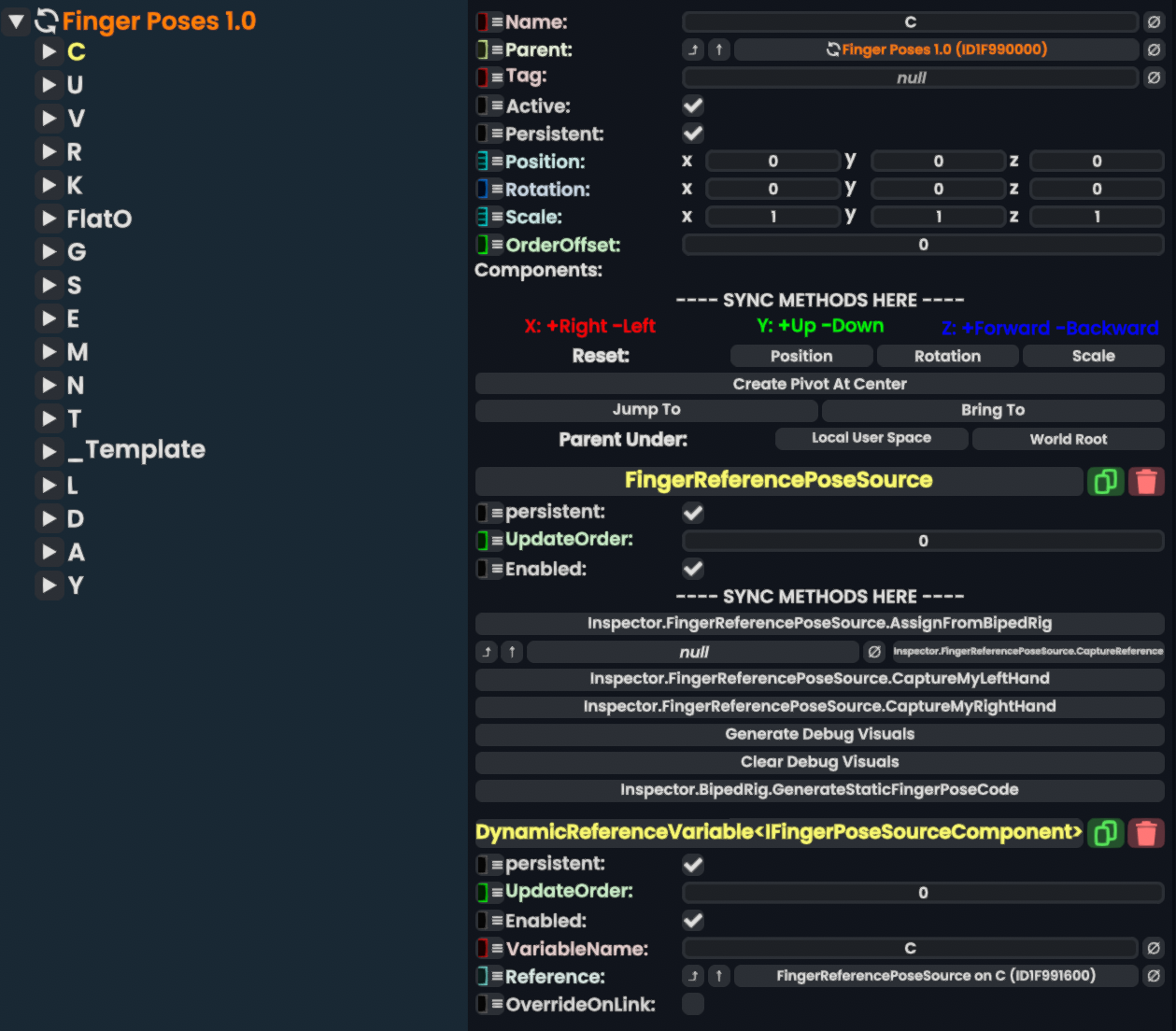

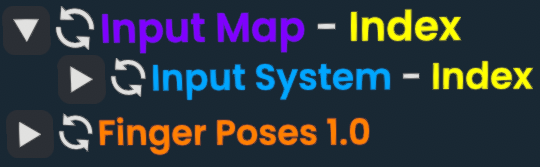

I tried to make it as modular as possible, so it consists of three systems that are all independent of each other:

FingerPoses exposes the poses, and the Input System exposes the current gesture's "ID".

The Input Map maps the gesture IDs to pose names.

And the system I made uses these to drive the poses onto your avatar's fingies.
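The data flow between the pieces can be modeled in a few lines of Python. This is only a sketch of the idea, not Resonite code: `FingerPose`, the single curl value per finger, the pose names, and `drive_hand` are all hypothetical, and a real finger pose carries more data than this.

```python
from dataclasses import dataclass
from typing import Dict


# Hypothetical stand-in for a finger pose: one curl value per finger
# (0.0 = straight, 1.0 = fully curled). Not Resonite's actual data layout.
@dataclass(frozen=True)
class FingerPose:
    thumb: float
    index: float
    middle: float
    ring: float
    pinky: float


# FingerPoses: poses exposed by name (illustrative values only).
FINGER_POSES: Dict[str, FingerPose] = {
    "Relaxed": FingerPose(0.3, 0.3, 0.3, 0.3, 0.3),
    "A": FingerPose(0.2, 1.0, 1.0, 1.0, 1.0),
    "B": FingerPose(1.0, 0.0, 0.0, 0.0, 0.0),
}

# Input Map: gesture ID (from the Input System) -> pose name.
INPUT_MAP: Dict[int, str] = {0: "Relaxed", 1: "A", 2: "B"}


def drive_hand(gesture_id: int, default: str = "Relaxed") -> FingerPose:
    """The driver: gesture ID -> pose name -> pose.

    Unmapped IDs fall back to the default pose instead of failing.
    """
    pose_name = INPUT_MAP.get(gesture_id, default)
    return FINGER_POSES[pose_name]


if __name__ == "__main__":
    # The Input System would supply this ID; here it is hard-coded.
    print(drive_hand(1))
```

Because the driver only talks to the other pieces through IDs and names, any one of them can be replaced without touching the rest.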

So you can add your own poses and map them to your own gestures, or just edit the current poses to your liking.

And if you would like a different input system, you can just replace the Input System with your own.

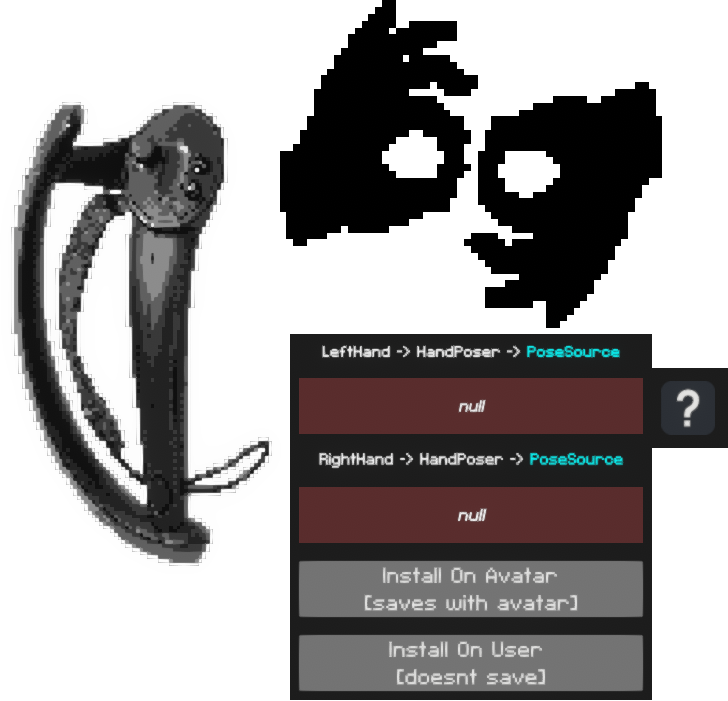

As a "test", I made a TouchController Input System and an Input Map that maps the TouchController's inputs to the poses.

I also published it alongside the Index one in my folder.

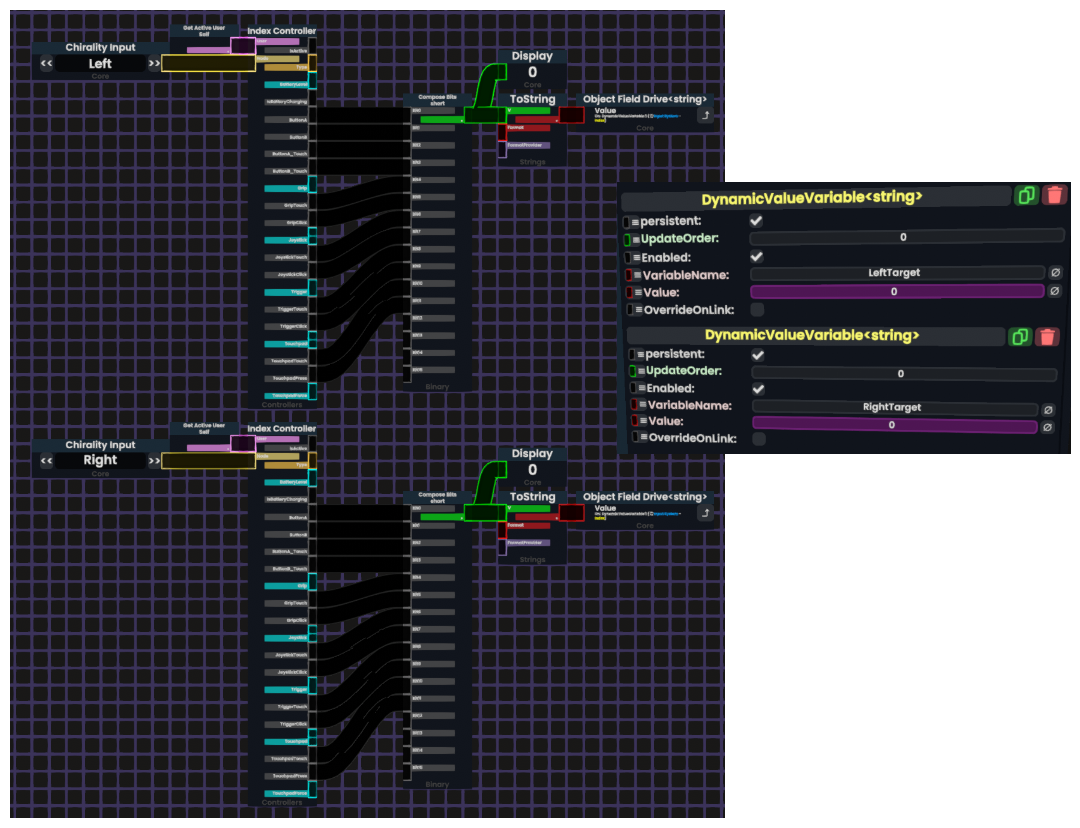

As you can see, I put the Input System under the Input Map, since the Input Map maps the Input System's outputs to pose names.

So they are only functionally independent; usually they should be used together.
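Why the two travel together: an Input System's output is just a number, and the same number can stand for different physical inputs on different controllers. A hypothetical Python sketch (the system functions, the value `3`, and both maps are made up for illustration):

```python
from typing import Callable, Dict

# An Input System is modeled as a function that returns the current gesture ID.
InputSystem = Callable[[], int]


def index_system() -> int:
    # Hypothetical: some combination of Index controller inputs encoded as 3.
    return 3


def touch_system() -> int:
    # Hypothetical: a different Touch controller input that also encodes as 3.
    return 3


# Each Input Map is written against the values its own Input System produces.
INDEX_MAP: Dict[int, str] = {3: "A"}
TOUCH_MAP: Dict[int, str] = {3: "B"}


def make_driver(system: InputSystem, input_map: Dict[int, str],
                default: str = "Relaxed") -> Callable[[], str]:
    """Bind an Input System to an Input Map; the result returns the current pose name."""
    def current_pose_name() -> str:
        return input_map.get(system(), default)
    return current_pose_name


index_driver = make_driver(index_system, INDEX_MAP)
touch_driver = make_driver(touch_system, TOUCH_MAP)
# Pairing a system with the wrong map still runs, but picks the wrong pose.
mismatched = make_driver(touch_system, INDEX_MAP)
```

Swapping in a new Input System therefore usually means swapping in its matching Input Map as well, which is what the TouchController pair does.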

The Input System should expose two Dynamic Variables: LeftTarget and RightTarget.

These should be driven with a unique value for each input you are trying to map.

You can simply take a Controller node and compose a value from its booleans, like I did.
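One way to make every button combination unique is to treat each boolean as one bit of an integer. This is an assumed encoding for illustration; the node graph in the published system may compose the value differently, and the input names in the comment are hypothetical.

```python
from typing import Dict, Sequence


def compose_target(buttons: Sequence[bool]) -> int:
    """Pack booleans into one integer: bit i is set when buttons[i] is True.

    N booleans give 2**N distinct values (0 .. 2**N - 1), so every
    combination of inputs maps to its own number.
    """
    value = 0
    for bit, pressed in enumerate(buttons):
        if pressed:
            value |= 1 << bit
    return value


def compose_targets(left: Sequence[bool], right: Sequence[bool]) -> Dict[str, int]:
    """Values for the two Dynamic Variables the Input System exposes."""
    return {"LeftTarget": compose_target(left), "RightTarget": compose_target(right)}


if __name__ == "__main__":
    # Hypothetical input order: [trigger touched, grip pressed, thumbstick touched]
    print(compose_targets([True, False, True], [False, False, False]))
    # prints {'LeftTarget': 5, 'RightTarget': 0}
```

Keeping the input order fixed matters: the Input Map is keyed on these numbers, so reordering the booleans changes which pose every gesture picks.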

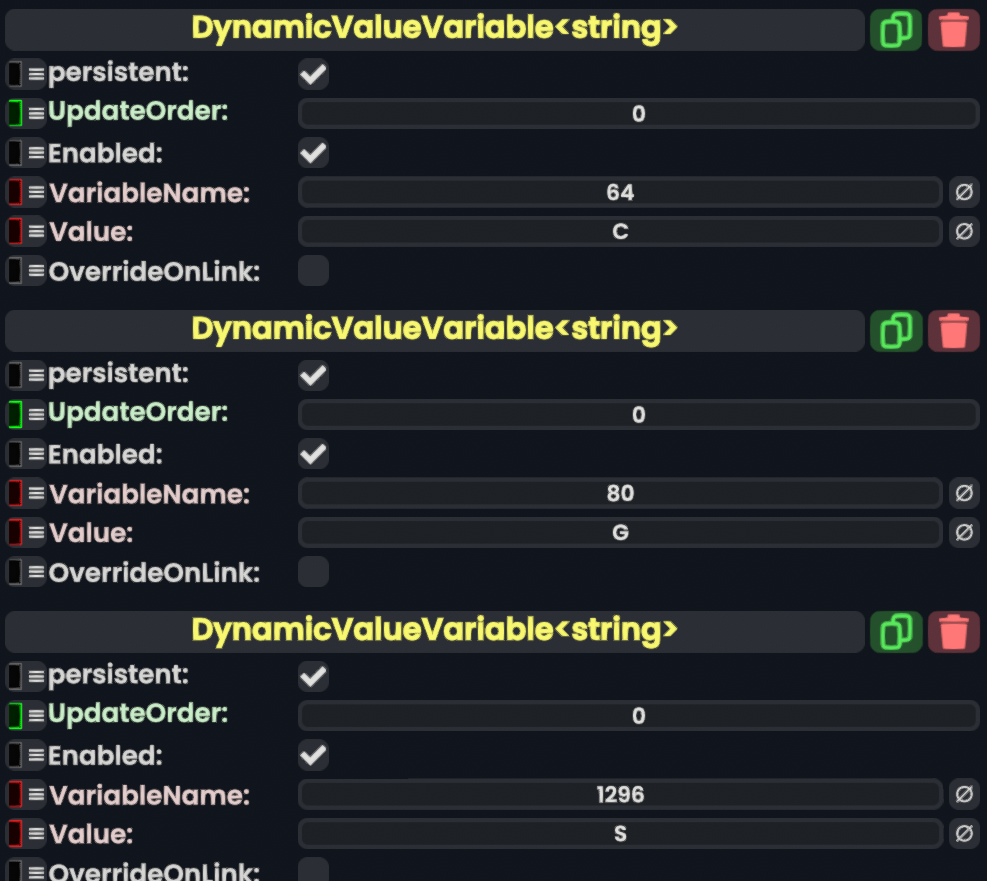

The Input Map is what it sounds like: it maps the input values from the Input System to pose names.

You can tweak these mappings by making a gesture and using the value the system shows you.
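The tweaking loop amounts to: make the gesture, read the target value, then add or change that entry in the map. A hypothetical helper that reports what a value currently maps to (the function name and message format are made up):

```python
from typing import Dict


def describe(input_map: Dict[int, str], target: int) -> str:
    """Report which pose a target value maps to, or that it is unmapped."""
    pose_name = input_map.get(target)
    if pose_name is None:
        return f"Target {target}: unmapped"
    return f"Target {target} -> {pose_name}"


if __name__ == "__main__":
    input_map: Dict[int, str] = {1: "A", 2: "B"}
    # Suppose making the gesture shows a value of 5.
    print(describe(input_map, 5))  # Target 5: unmapped
    input_map[5] = "W"             # map it to the pose you want
    print(describe(input_map, 5))  # Target 5 -> W
```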

Here's where Resonite's FingerPose system comes into play.

But it's still very simple: you just need to expose the poses as Dynamic Variables.
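Since the Input Map refers to poses only by name, a typo on either side breaks the link between a gesture and its pose. A hypothetical consistency check that lists pose names the map uses but nothing exposes (the helper and all names are illustrative, not part of Resonite):

```python
from typing import Dict, Iterable, List


def missing_poses(input_map: Dict[int, str], exposed: Iterable[str]) -> List[str]:
    """Pose names used by the Input Map that no exposed pose provides, sorted."""
    exposed_set = set(exposed)
    return sorted({name for name in input_map.values() if name not in exposed_set})


if __name__ == "__main__":
    exposed_poses = ["Relaxed", "A", "B"]  # hypothetical exposed pose names
    input_map = {0: "Relaxed", 1: "A", 2: "B", 5: "W"}
    print(missing_poses(input_map, exposed_poses))  # ['W']
```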